When I started the now-defunct Steel Hunters (RIP), I wrestled with how best to handle version control for the project. Managing Unreal Engine itself is easy enough, as Epic has structured its repository to be as git-friendly as it can be.

The actual game projects built with Unreal Engine: not so much. Especially when the project is fairly large-scale and high-fidelity.

Command Line Work

Before I continue — because it will be critical for the rest of this article — let’s talk about using a command line for work.

Up until about a year ago, I rarely ever worked with a command line. It was always a last resort, or reserved for projects that were specifically set up to be managed from the command line. I just like my GUIs. And, as far as coding goes, I wouldn’t trade Visual Studio for anything (though I now routinely use Visual Studio Code for anything that isn’t C++ or C#).

I don’t really know what specifically changed that; maybe it was the OS X terminal or PowerShell that made command line work more palatable. Then I discovered ConEmu, which made everything even more user-friendly. And recently, in response to my article about my software recommendations, a reader told me about Cmder, which I now wouldn’t trade for anything. Unless I found something better.

Now that I’ve gotten used to working within the command line, I can’t recommend it strongly enough. And no, you don’t have to go to extremes with it: I would never use a command-line text editor, I don’t use it for all of my tasks and needs, and I certainly would never use it to make an ASCII representation of the Joy Machine logo.

The Joy Machine Logo

He’s so cute.

I also have no memory whatsoever of the gamut of commands that I should know by heart. The flags a given command can take are just things I don’t want taking up space in my brain, especially for PowerShell commands, which follow unconventional standards, are verbose as can be, and are, in general, just dumb. The same is true of git commands which, while I always remember the basics, can get so complex that there’s no way I want to (ahem) commit them to memory. That’s what git aliases are for, but more on that later. For now, here are some resources from one of my public GitHub repositories. Each subfolder in the scripts root folder has its own README file with details, but I’ll TL;DR it here:

OS X Terminal Improvements: An .inputrc file for improving the user experience in the OS X terminal. This includes making Ctrl+arrow keys skip entire words, case-insensitive tab completion for commands and folders, and so on and so forth. The source of this file is a gist from Gregory Nicholas, whom I do not know but whose existence I greatly appreciate.

PowerShell Scripts: These were all me, and I adore them. For the most part, they’re a variety of aliases for commands that I’ll never remember but use often. The sample profile, however, also scans the cmdlets subfolder for self-contained PowerShell scripts (any command with some amount of complexity) and adds them to your toolset whenever you load PowerShell. Yay!

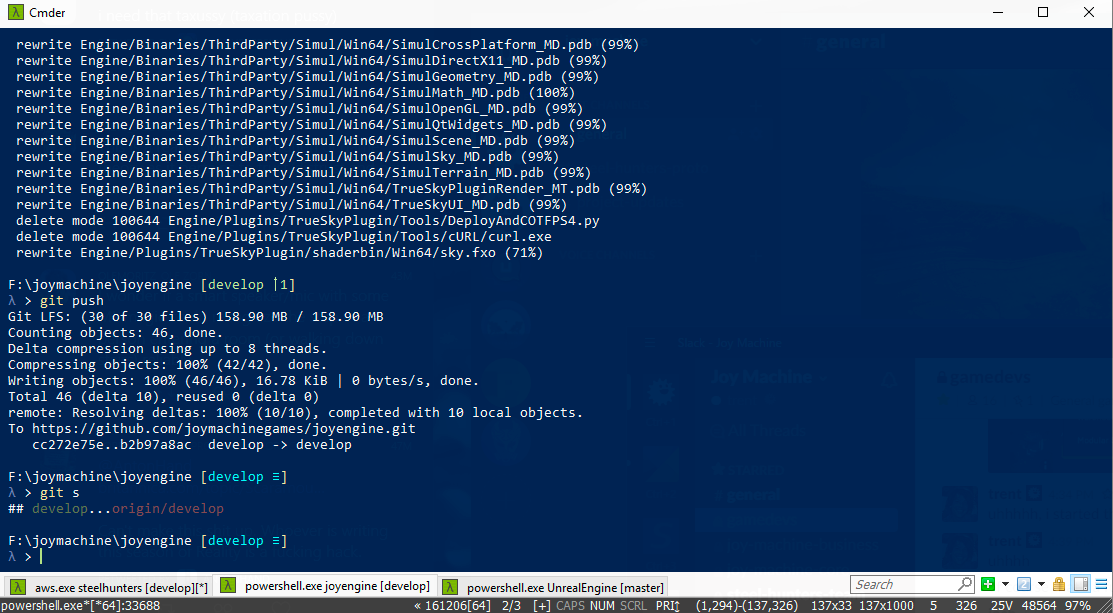

Cmder

We had some good times together, old friend.

Steel Hunters Version Control: Iteration One

I like git; it’s a great VCS with an intuitive workflow and command set. That said, Perforce has always been the go-to solution for games as it handles binary files (especially large binary files) incredibly well. I’m not a huge fan of Perforce’s GUI client, but it’s workable. That all said: I like git. And Perforce has Helix4Git, which works with Perforce’s standard version control as well as git. It just maintains two separate databases that it mirrors based on your workflow.

You may have already figured out the issue with this: it mirrors your repository contents on your hard drive. And since I never had the money to upgrade to the desktop computer I spec’d out in January, my storage space on my development laptop is precious.

That quickly made it a no-go.

Steel Hunters Version Control: Iteration Two

Okay, this time we’re going straight-up Perforce. I set up a server on Amazon AWS EC2, installed the necessary Perforce server components, and all was right with the world.

Except for the fact that Amazon AWS EC2 server costs add up. Quickly. Especially when you have a project that was, at the time, 100 GB without any local versioning whatsoever. The server-side storage costs increased rapidly.

And since Steel Hunters brings in negative dollars, I have always had a job (I now have a contract gig or two for moneydollars). I simply did not have time to manage server maintenance on top of everything else. Also: I know nothing about server maintenance, so whenever I needed to do something, I ended up in a Google rabbit hole with fifty Chrome tabs open, each of which solved one aspect of the series of problems I was dealing with.

So: no-go.

Steel Hunters Version Control: Iteration Three

git (I refuse to capitalize this for some reason) doesn’t handle binary files particularly well, and it handles large binary files especially poorly. That’s why git-lfs was introduced; I started using it shortly after its release and found its feature set lacking and its general usability poor.

GitHub, while I love it more than I love most sites on the internet, has a limit on the size of the repositories it supports. It’s roughly 2 GB, so I could store source files and key binary content files on it, but it still seemed risky.

So: no-go.
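A quick way to see which files would run into trouble on GitHub without git-lfs is to scan the working tree for anything over GitHub’s per-file limit (GitHub blocks individual files larger than 100 MiB unless they are stored with Git LFS). The Python sketch below is a hypothetical helper, not part of any tool mentioned here; `lfs_candidates` and its `threshold` parameter are illustrative names.

```python
import os
from pathlib import Path
from typing import List, Tuple

# GitHub blocks files larger than 100 MiB that are pushed without Git LFS.
GITHUB_FILE_LIMIT = 100 * 1024 * 1024


def lfs_candidates(root: Path, threshold: int = GITHUB_FILE_LIMIT) -> List[Tuple[int, str]]:
    """Return (size, relative path) for files at or above `threshold` bytes,
    largest first, skipping the .git directory itself."""
    results = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune .git in place so os.walk never descends into it.
        if ".git" in dirnames:
            dirnames.remove(".git")
        for name in filenames:
            path = Path(dirpath) / name
            if path.is_symlink():
                continue
            size = path.stat().st_size
            if size >= threshold:
                results.append((size, path.relative_to(root).as_posix()))
    return sorted(results, reverse=True)
```

Lowering `threshold` (for example to 50 MiB) turns the same scan into a rough list of files worth tracking with git-lfs before they ever reach a commit.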

Steel Hunters Version Control: Iteration Four

Someone on Twitter told me about Assembla and it sounded absolutely perfect. git and Perforce support for projects. YES. LIFE WAS COMPLETE. I could store source files on git (which I believe had no size limitations) and content files on Perforce (which had a size limitation, but it worked for me).

When I started adding some contractors to my team (contractors who, due to some miracle of the universe or simply being great people, were willing to work for free/deferred payment), this dual-VCS setup proved confusing, hard to set up, hard to manage properly, and generally just bad mojo.

It didn’t help that I was running into some issues with Assembla itself. Their Perforce setup was fairly difficult to reconcile with how Perforce is generally configured, and their server-side git support (including git-lfs, which I reluctantly used after being told it was much improved since I last tried it) was not up-to-date with recent releases of git and git-lfs. That led to some incredibly bizarre errors that were time-consuming and difficult to figure out.

So: no-go.

Steel Hunters Version Control: Iteration Five

I then gave Visual Studio Team Services a shot; it’s a git-based VCS with no limit on repository size. It’s also free unless you want to bolt on some Azure-based continuous integration or other fanciness. I didn’t believe the lack of size limitations, so I contacted customer support and explained that my project was large. Very large. They said: yup, no limit, don’t worry about it. “And it’s free?” “Yes, for your purposes it is completely free of charge.”

I’ve been using VSTS for the last five months without a single issue. Its user management is a complete pain, but that’s such an uncommon task that I just accepted it.

The problem that I ran into a couple of days ago was absolutely no fault of VSTS. It was great in every way, minus the many tools, utilities, and integrations that rely on GitHub specifically. I still actively recommend it for mid-sized projects.

The problem was that I kept running out of space on my hard drive. And, since I am not smart, it took me until a couple of days ago to finally run a disk space analysis tool (WinDirStat) on the drive with my Steel Hunters repository on it.

It totaled 380 GB, of which 140 GB was the project itself and 240 GB was the .git folder.
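WinDirStat is what surfaced this, but the same check is easy to script. The sketch below uses only the Python standard library; `dir_size` and `repo_breakdown` are hypothetical helper names, and the numbers they report are apparent file sizes, which can differ slightly from the on-disk usage a tool like WinDirStat shows.

```python
import os
from pathlib import Path


def dir_size(root: Path) -> int:
    """Total size in bytes of all regular files under `root` (symlinks skipped).
    Returns 0 if `root` does not exist or is not a directory."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            if not path.is_symlink():
                total += path.stat().st_size
    return total


def repo_breakdown(repo: Path) -> dict:
    """Split a repository's on-disk footprint into .git and the working tree."""
    total = dir_size(repo)
    git_bytes = dir_size(repo / ".git")
    return {"total": total, "git": git_bytes, "working_tree": total - git_bytes}
```

Calling `repo_breakdown(Path("D:/SteelHunters"))` (a made-up path) would return byte counts for the whole folder, the .git history, and the files you actually work with.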

I spent the next day trying everything I could find on the entire internet to prune now-irrelevant large files from the repository’s history: git-based commands, other git-based commands, and even BFG. I think I ended up shaving about 10–20 GB off the .git folder. But when I attempted to push the rewritten history to VSTS, it would fail. It would fail every time. And there was no combination of solutions that I could find (including the one on that page) that would keep it from failing.
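Before rewriting history with BFG or anything else, it helps to know which blobs are actually eating the space. A common approach pipes `git rev-list --objects --all` into `git cat-file --batch-check` to get each object’s type, ID, size, and path. The Python wrapper below is a sketch of that approach, assuming `git` is on PATH; `parse_batch_check` and `largest_blobs` are hypothetical names.

```python
import subprocess
from typing import List, Tuple


def parse_batch_check(output: str) -> List[Tuple[int, str, str]]:
    """Parse `git cat-file --batch-check` lines shaped like
    '<type> <sha> <size> <path>' and keep only blobs as (size, sha, path)."""
    blobs = []
    for line in output.splitlines():
        parts = line.split(" ", 3)  # the path may itself contain spaces
        if len(parts) < 3 or parts[0] != "blob":
            continue
        path = parts[3] if len(parts) == 4 else ""
        blobs.append((int(parts[2]), parts[1], path))
    return blobs


def largest_blobs(repo: str, top: int = 20) -> List[Tuple[int, str, str]]:
    """List the `top` largest blobs reachable from any ref in `repo`."""
    objects = subprocess.run(
        ["git", "rev-list", "--objects", "--all"],
        cwd=repo, capture_output=True, text=True, check=True,
    ).stdout
    # %(rest) echoes whatever followed the object ID on the input line (the path).
    checked = subprocess.run(
        ["git", "cat-file",
         "--batch-check=%(objecttype) %(objectname) %(objectsize) %(rest)"],
        cwd=repo, input=objects, capture_output=True, text=True, check=True,
    ).stdout
    return sorted(parse_batch_check(checked), reverse=True)[:top]
```

The sizes are uncompressed object sizes, so they overstate what each blob costs inside a packfile, but they are good enough to rank which paths deserve a history rewrite.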

Steel Hunters Version Control: Now

I approached this problem from an entirely different perspective yesterday. git and git-lfs remain my favorite form of VCS that doesn’t bring with it the cost of Perforce.

A Quick Aside on git-lfs

git-lfs is now bundled with the Windows installer for git. That isn’t made obvious anywhere, and it can lead to multiple installations of git-lfs on your system.
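If you suspect you have more than one git-lfs, it’s worth listing every copy on your PATH, since `shutil.which` (like the shell) only reports the first match. This is a hypothetical standard-library helper, a sketch rather than an official tool; on Windows it also tries the extensions listed in `PATHEXT`.

```python
import os
from pathlib import Path
from typing import List, Optional


def find_all_on_path(program: str, path_env: Optional[str] = None) -> List[Path]:
    """Return every executable named `program` on PATH, in search order.
    Unlike shutil.which, this does not stop at the first match."""
    if path_env is None:
        path_env = os.environ.get("PATH", "")
    suffixes = [""]
    if os.name == "nt":
        # Windows resolves bare command names through PATHEXT (e.g. ".EXE;.CMD").
        suffixes += os.environ.get("PATHEXT", ".EXE").lower().split(os.pathsep)
    found: List[Path] = []
    for directory in path_env.split(os.pathsep):
        if not directory:
            continue
        for suffix in suffixes:
            candidate = Path(directory) / (program + suffix)
            if candidate.is_file() and os.access(candidate, os.X_OK) and candidate not in found:
                found.append(candidate)
    return found
```

Running each returned path with the `version` argument (for example `git-lfs version`) shows which release each copy is; the first entry is generally the one your shell picks.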

Where Was I…

I wanted to stick with git and git-lfs and, more than that, I wanted to go back to using GitHub. I bought a few “packs” of git-lfs storage on GitHub, which wasn’t ideal, but it wasn’t going to kill my bank account (even with the other cost this plan adds, described below), since I’ll just give up a few meals a week. And I came up with a new solution for managing our entire project base:

Steel Hunters Project — All source code, unique DLLs (those necessary to build aspects of the project), and select content files (game data files and, as of this morning, UMAP files due to the importance of versioning for them despite their large size). Yay, happy Trent. Back on GitHub!

Joy Engine (Unreal Engine 4 Custom Fork) — This too would go on GitHub, because it was never a problem child in the first place.

Steel Hunters Source Asset Files — This repository is for managing the source content files that are imported into the Steel Hunters UE4 project. This includes files like PNGs, TGAs, PSDs, FBXs, and so on and so forth. These files are more critical to the project than any UASSET in the game project, which is why I sprung for the additional git-lfs storage packs, as I want them versioned. They aren’t as numerous and heavy as the game project’s content folder, as that folder contains a number of Unreal Engine asset packages that I don’t generally care about exporting the source files of, save special circumstances.

Steel Hunters Project Content Folder

This was the elephants-stacked-on-elephants-stacked-on-giant-cubes-of-solid-lead of the whole dilemma. In general, most of these content files do not change with any frequency. And, if they do change, it’s generally a fairly trivial alteration: a flag, a property, or a minor edit. Since I always note the changes I make in fair detail in my git commit messages, if anything ever went bad, I could just read back through the commit history and re-make the changes if need be (by downloading the original asset package or re-importing the source file).

The solution I ended up with is this: an Amazon AWS S3 bucket that the Steel Hunters Content directory is synced to whenever any files change. The syncing is handled by a tool that monitors the folder for modifications and uploads them to the S3 bucket.
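The monitoring tool isn’t named here, so the following is only a rough, polling-based sketch of what such a tool does: take a snapshot of file modification times and sizes, compare it with the previous one, and hand added, modified, or deleted paths to upload and delete callbacks. Those callbacks are hypothetical placeholders; in a real setup they might wrap an S3 client, which this sketch does not include.

```python
import os
from pathlib import Path
from typing import Callable, Dict, List, Tuple

# relative path -> (modification time in ns, size in bytes)
Snapshot = Dict[str, Tuple[int, int]]


def take_snapshot(root: Path) -> Snapshot:
    """Record mtime and size for every file under `root`."""
    snap: Snapshot = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            st = path.stat()
            snap[path.relative_to(root).as_posix()] = (st.st_mtime_ns, st.st_size)
    return snap


def diff_snapshots(old: Snapshot, new: Snapshot) -> Tuple[List[str], List[str]]:
    """Return (added_or_modified, deleted) relative paths."""
    changed = sorted(p for p, meta in new.items() if old.get(p) != meta)
    deleted = sorted(p for p in old if p not in new)
    return changed, deleted


def sync_once(root: Path, previous: Snapshot,
              upload: Callable[[str], None],
              delete: Callable[[str], None]) -> Snapshot:
    """One polling pass. `upload` and `delete` are hypothetical hooks that
    would push or remove the corresponding object in remote storage."""
    current = take_snapshot(root)
    changed, deleted = diff_snapshots(previous, current)
    for rel in changed:
        upload(rel)
    for rel in deleted:
        delete(rel)
    return current
```

Calling `sync_once` in a loop, feeding each returned snapshot into the next call and sleeping between passes, gives the basic monitor. Polling is the simplest design to get right; a production tool would more likely use OS file-change notifications and would need to cope with files that are still being written.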

Is this absolutely ideal? No. It is, however, the most time-effective way for me to maintain the project until it gets funded properly and I can bring on a team and someone with more experience than me in managing servers (which would make switching to Perforce a viable option, if the need arises).

Since this whole plan was put into effect yesterday, time will tell how well everything works out.

Working with git Like a Rockstar

I don’t pretend to be a git expert, but at this point I’ve gotten very comfortable with my command-line workflow and built up a fair number of shortcuts, tools, and utilities that make my day-to-day work effortless. And I don’t keep those a secret.

But first: git GUI applications are universally bad. All of them lack the full set of functionality that you really want for properly managing a repository, for one. And some of the applications themselves are just… bad.

SourceTree, for instance, should be avoided at all costs. I’ve found it buggy, slow, completely unable to handle large projects, and just a general disappointment. I’m not even going to link it or give it bold text. That’s what I think of it.

GitKraken will be good some day. It’s just not there yet. It has issues with large projects, its interface, while pretty, is non-intuitive, and it’s lacking some very necessary features for properly managing a repository. As I said, though: I think it’s promising.

GitHub Desktop is an Electron app I just found last night and… I like it? I wouldn’t use it for actually managing a repository, but if I ever want to quickly look over all of the various local repositories on my computer, I can open it, see some quick diffs, and then close it.

Tower is thoroughly decent, but I’ve encountered a fair number of issues with it when working at scale.

That said: GUIs for performing diff and merge operations are absolutely crucial. And while P4Merge gets the job done adequately, what you really want is Beyond Compare. Just… trust me. For one thing, Beyond Compare not only does diff and merge operations on git repositories, but it can also do folder/file diff and merge operations even on things that aren’t managed by any sort of version control. It’s wonderful.

NOTE: This is an archive article that I’m updating; the information here may be out of date. GitHub Desktop, for instance, is pretty good. And I’m looking at giving GitKraken another go in a few minutes.

But Just Work with the Command Line (Except the diff/merge Thing)

I can’t emphasize enough how useful the git command line is (by the way, when you install it on Windows, make sure you install both bash and standard Windows command-line compatibility). It’s powerful, it gives you all the information you need, it does a better job of telling you what is and isn’t managed by git-lfs, it lets you easily pick and choose what goes into a given commit, and so on and so forth.

That doesn’t mean it’ll be instantly obvious how to work with it, which is why I keep some handy files in my repo’s git folder to help out:

[README](https://github.com/trentpolack/joymachine-public/blob/master/git/README.md) — Some general information about what the templates do as well as some general git information to help out.

[gitconfig](https://github.com/trentpolack/joymachine-public/blob/master/git/gitconfig.template) — A whole lot of aliases that make common operations a bit quicker to execute as well as aliases for operations that are not at all trivial to execute. git state, git lard, git prettylog, and git last are my favorites.

Also: I added output colors for some git operations. It was necessary.

[gitattributes](https://github.com/trentpolack/joymachine-public/blob/master/git/gitattributes.template) — Rules for files that I absolutely want stored as text (CPP/H/CS) and some general rules for files I want managed by git-lfs.

[gitignore](https://github.com/trentpolack/joymachine-public/blob/master/git/gitignore.template) — A list of files and extensions that I commonly ignore (focused on UE4 projects).
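To sanity-check ignore rules like these, `git check-ignore -v <path>` is the authoritative answer, since it reports which pattern (if any) matched. For a rough offline approximation, the Python sketch below treats literal `Name/` patterns as directory names at any depth and other patterns as file-name globs. The pattern list is a hypothetical example of typical UE4 build and cache folders, not the contents of the linked template, and negation, anchoring, and `**` are deliberately not handled.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath
from typing import Sequence

# Hypothetical example patterns; the linked gitignore template is the real list.
EXAMPLE_UE4_IGNORES = ["Binaries/", "Intermediate/", "Saved/", "DerivedDataCache/", "*.sln"]


def is_ignored(rel_path: str, patterns: Sequence[str] = EXAMPLE_UE4_IGNORES) -> bool:
    """Rough approximation of gitignore matching for a file path:
    - literal 'Name/' patterns match a directory component at any depth;
    - other patterns are globs matched against the file name only.
    Negation (!), anchoring (leading /) and '**' are not handled."""
    parts = PurePosixPath(rel_path).parts
    for pattern in patterns:
        if pattern.endswith("/"):
            # Only directory components count, never the file name itself.
            if pattern[:-1] in parts[:-1]:
                return True
        elif fnmatchcase(parts[-1], pattern):
            return True
    return False
```

A quick loop over `git ls-files` output with this helper flags anything that is tracked but looks like it should be ignored; `git check-ignore` then confirms the real verdict.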

Conclusion

And that’s my story about version control. fin