Multiple-Choice Reasoning in LLMs

Have you ever wondered how language models can ace multiple-choice questions the way a human does? This article introduces the Multi-Modal Process of Elimination (MM-PoE), a two-step approach that helps vision-language models rule out wrong answers before zeroing in on the best one. Think of it as giving these models a test-taking strategy that improves their accuracy on visual reasoning tasks.

Whether you’re just diving into AI and ML or you’re a seasoned data scientist, you’ll find that this method tackles one of the key challenges in visual reasoning by moving beyond the usual zero-shot, language-only frameworks. It also performs well in few-shot settings, which suggests it is versatile across multi-modal reasoning tasks.

How MM-PoE Works

Multi-Modal Process of Elimination (MM-PoE) operates on a two-step mechanism designed to enhance the decision-making capabilities of vision-language models (VLMs) in multiple-choice visual reasoning tasks. The method first eliminates options and then runs a focused prediction phase over what remains. The strategy is rooted in the belief that separating the elimination of clearly incorrect options from the choice of the best remaining option will improve overall task performance.

Formulating the problem

Given a multiple-choice visual reasoning task, we define the problem setting as follows:

- Let x be the question or context provided.

- Let h be the image provided.

- Let Y = {y_1, y_2, …, y_n} be the set of multiple-choice options available.

- Let y be the correct answer from Y.

The goal is to develop an in-context learning method that accurately selects y from Y given x and h.

Two-Step Scoring Method

Step 1: Elimination

In the first step of the MM-PoE method, each option is scored based on a specified metric. The score function, score(x,h,y_i), evaluates each option’s plausibility given the question x and image h. The scores are used to eliminate options that are deemed less likely to be correct. Specifically, options whose scores are below the average score are eliminated. This is calculated as follows:

- s_i = score(x, h, y_i) for i = 1, …, n

- Y_wrong = {y_i | s_i < avg(s_1, s_2, …, s_n)}

This elimination strategy intuitively aligns with how humans often discard options that seem incorrect before carefully considering the remaining choices.
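The elimination rule above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function name `eliminate_below_average` and the toy scores are assumptions made for the example, and in practice the scores would come from a vision-language model.

```python
from statistics import mean


def eliminate_below_average(scores):
    """Return the indices of options whose score is strictly below the mean."""
    threshold = mean(scores)
    return {i for i, s in enumerate(scores) if s < threshold}


# Hypothetical scores for four options (illustrative values, not model output).
toy_scores = [-1.0, -2.0, -5.0, -4.0]

# The mean is -3.0, so the options at indices 2 and 3 fall below it.
print(sorted(eliminate_below_average(toy_scores)))  # [2, 3]
```

Because the comparison is strict, the highest-scoring option is never eliminated, so at least one option always survives into the prediction step. If all scores are equal, nothing is eliminated.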

Step 2: Prediction

The second step involves making the final choice from the non-eliminated options. This step utilizes a binary mask to exclude the eliminated options during the prediction phase. The mask for each option y_i is defined as follows:

- m_i = 0 if y_i ∈ Y_wrong, otherwise m_i = 1

The masked context x_mask is then constructed by modifying the original context to include only the options for which m_i = 1. Each option is scored again, but this time within a context that explicitly excludes the eliminated options, for example by using a template T that replaces each option in Y_wrong with a mask token while leaving the remaining options visible.
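One way to picture the masked context is the sketch below. The prompt wording and the helper name `build_masked_prompt` are hypothetical, since the article does not spell out the template T. Only the idea of replacing eliminated options with `[MASK]` comes from the examples later in this article.

```python
def build_masked_prompt(question, options, mask, mask_token="[MASK]"):
    """Render the options, replacing every option whose mask bit is 0."""
    shown = [opt if m == 1 else mask_token for opt, m in zip(options, mask)]
    # The wording of this prompt is an assumption made for illustration.
    return f"Question: {question}\nOptions: {', '.join(shown)}\nAnswer:"


prompt = build_masked_prompt(
    "Which of these states is farthest north?",
    ["West Virginia", "Louisiana", "Arizona", "Oklahoma"],
    mask=[1, 1, 0, 0],
)
print(prompt)
```

With the mask `[1, 1, 0, 0]`, the options line reads `West Virginia, Louisiana, [MASK], [MASK]`, which matches the ScienceQA example shown later.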

The final predicted answer y' is then the option with the highest score among the remaining options:

- y' = argmax_{y_i : m_i = 1} score(x_mask, h, y_i)
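The masked argmax can be sketched as follows. The function name `predict` and the rescored values are assumptions for illustration. A real system would obtain the second-round scores by querying the model with the masked context and the image.

```python
def predict(options, mask, rescored):
    """Pick the highest-scoring option among those with mask bit 1."""
    survivors = [i for i, m in enumerate(mask) if m == 1]
    best = max(survivors, key=lambda i: rescored[i])
    return options[best]


options = ["West Virginia", "Louisiana", "Arizona", "Oklahoma"]
mask = [1, 1, 0, 0]
# Hypothetical second-round scores; entries for masked options are ignored.
rescored = [-0.5, -1.5, 0.0, 0.0]

print(predict(options, mask, rescored))  # West Virginia
```

Note that the eliminated options are excluded from the argmax even when their entries in `rescored` happen to be larger, which is what the mask is for.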

Evaluation Strategy

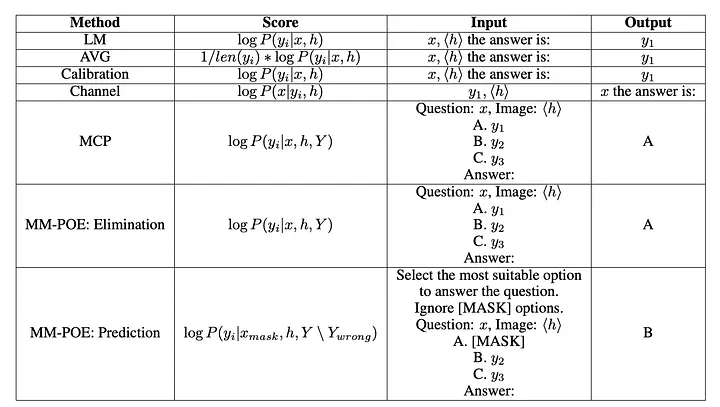

To show off how MM-PoE stands out, we put it head-to-head with five popular baseline scoring methods. Here’s a quick overview of the baseline scoring strategies:

- Language Modeling (LM): Think of this as the “vanilla” approach, which uses the model’s raw likelihood of each option as the scoring function.

- Average Language Modeling (AVG): Instead of the raw likelihood, this method averages the log probabilities across all tokens in each option, which reduces the penalty that longer options would otherwise pay.

- Calibration: Here, the scores are adjusted with calibration techniques to account for the model’s confidence.

- Channel: This method flips the usual approach by scoring each option based on how likely the question is, given the option. It’s a clever twist on traditional conditional probabilities.

- Multiple Choice Prompting (MCP): By presenting the question alongside all possible answers in one go, this approach encourages the model to pick the most likely option right off the bat.

Each of these methods brings its own flavor to the table. MM-PoE differs from all of them by adding an explicit elimination step before the final prediction.
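To make the difference between the first two baselines concrete, here is a small sketch that treats the LM score as the sum of an option's token log probabilities and the AVG score as their mean. The function names and the token log probabilities are invented for illustration, and the other baselines are left out because their exact formulas are not given here.

```python
def lm_score(token_logprobs):
    """LM baseline: total log-likelihood of the option's tokens."""
    return sum(token_logprobs)


def avg_score(token_logprobs):
    """AVG baseline: mean log probability per token."""
    return sum(token_logprobs) / len(token_logprobs)


# Hypothetical per-token log probabilities for two options.
toy = {
    "a four-token option": [-0.5, -0.5, -0.5, -0.5],
    "short": [-1.5],
}

lm_pick = max(toy, key=lambda opt: lm_score(toy[opt]))
avg_pick = max(toy, key=lambda opt: avg_score(toy[opt]))
print(lm_pick)   # short
print(avg_pick)  # a four-token option
```

In this toy case the two baselines disagree: the summed score favors the short option (-1.5 versus -2.0), while the averaged score favors the longer one (-0.5 versus -1.5).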

MM-PoE in Action

The application prompts the user for the inputs to a multiple-choice question: the question text, the answer choices, and the path to the image that goes with the question. Once these are provided, it displays the predicted answer based on the model’s outputs.

ScienceQA Example

Question: Which of these states is farthest north?

Options: West Virginia, Louisiana, Arizona, Oklahoma

Ground Truth Option: West Virginia

Predicted Masks: West Virginia, Louisiana, [MASK], [MASK]

Predicted Option: West Virginia

AI2D Example

Question: Are phytoplankton predators or prey in this food chain?

Options: producer, predator, prey, NA

Ground Truth Option: prey

Predicted Masks: [MASK], predator, prey, NA

Predicted Option: prey

Conclusion

By mimicking a smart test-taker who first eliminates wrong answers, MM-PoE improves the performance of vision-language models in both zero-shot and few-shot settings. This human-inspired approach not only refines visual reasoning but also opens doors to exciting applications like medical imaging and scientific discovery. Get ready to see AI think a little more like us!

Resources

Curious to see how this works in practice? Check out our linked software and the full paper below. Join us as we explore the next frontier of AI-driven visual reasoning!